Difference between revisions of "Basin modeling: identifying and quantifying significant uncertainties"

| Line 17: | Line 17: | ||

}} | }} | ||

| − | Identifying and quantifying significant uncertainties in basin modeling, 2012, Hicks, P. J. Jr., C. M. Fraticelli, J. D. Shosa, M. J. Hardy, and M. B. Townsley, ''in'' Peters, Kenneth E., David J. Curry, and Marek Kacewicz, eds., Basin modeling: New horizons in research and applications: AAPG Hedberg Series no. 4, p. 207-219. | + | Identifying and quantifying significant uncertainties in basin modeling, 2012, Hicks, P. J. Jr., C. M. Fraticelli, J. D. Shosa, M. J. Hardy, and M. B. Townsley, ''in'' Peters, Kenneth E., David J. Curry, and Marek Kacewicz, eds., Basin modeling: New horizons in research and applications: AAPG Hedberg Series no. 4, p. 207-219. |

| + | |||

| + | |||

| + | |||

Basin modeling is an increasingly important element of exploration, development, and production workflows. Problems addressed with basin models typically include questions regarding burial history, source maturation, hydrocarbon yields (timing and volume), hydrocarbon migration, hydrocarbon type and quality, reservoir quality, and reservoir pressure and temperature prediction for pre–drill analysis. As computing power and software capabilities increase, the size and complexity of basin models also increase. These larger, more complex models address multiple scales (well to basin) and problems of variable intricacy, making it more important than ever to understand how the uncertainties in input parameters affect model results. | Basin modeling is an increasingly important element of exploration, development, and production workflows. Problems addressed with basin models typically include questions regarding burial history, source maturation, hydrocarbon yields (timing and volume), hydrocarbon migration, hydrocarbon type and quality, reservoir quality, and reservoir pressure and temperature prediction for pre–drill analysis. As computing power and software capabilities increase, the size and complexity of basin models also increase. These larger, more complex models address multiple scales (well to basin) and problems of variable intricacy, making it more important than ever to understand how the uncertainties in input parameters affect model results. | ||

| Line 76: | Line 79: | ||

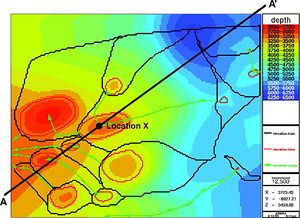

[[File:H4CH12FG1.JPG|thumb|300px|{{figure number|1}}Illustration of the importance of considering uncertainty in an analysis. The "High Most Likely" case (green) has a most likely charge greater than the minimum and the "Low Most Likely" case (red) has a most likely charge less than the minimum. However, a consideration of the probability distributions (triangular distributions in this example) can alter our perception of what is "low risk" and what is "high risk."]] | [[File:H4CH12FG1.JPG|thumb|300px|{{figure number|1}}Illustration of the importance of considering uncertainty in an analysis. The "High Most Likely" case (green) has a most likely charge greater than the minimum and the "Low Most Likely" case (red) has a most likely charge less than the minimum. However, a consideration of the probability distributions (triangular distributions in this example) can alter our perception of what is "low risk" and what is "high risk."]] | ||

| − | Why consider uncertainty? Is not a single deterministic case sufficient for analysis? Consider the simple case of estimating charge volume to a trap. The necessary minimum charge volume required for success (i.e., low charge risk) and a range associated with this minimum charge have been defined and are illustrated by the vertical black solid and dashed lines, respectively, in [[:file:H4CH12FG1.JPG|Figure 1]]. If a model predicts a charge volume greater than the minimum, then it might be said that little or no charge risk exists. Similarly, if a model predicts a charge volume less than the minimum, then we might say that a significant charge risk exists. These cases are illustrated in Figure 1 and are labeled “low risk” and “high risk,” respectively. However, the perception of what is low risk and what is high risk can change greatly when the probability of an outcome is considered. In this example, the difference between low risk and high risk becomes less definitive, as indicated in Figure 1. Although this is a simplistic illustration, all of the key input parameters in a basin model have the potential to cause this degree of ambiguity in the final results. For that reason, estimates of the range of possible outcomes are as important to the final analysis as estimates of the most likely outcome. | + | Why consider uncertainty? Is not a single deterministic case sufficient for analysis? Consider the simple case of estimating charge volume to a trap. The necessary minimum charge volume required for success (i.e., low charge risk) and a range associated with this minimum charge have been defined and are illustrated by the vertical black solid and dashed lines, respectively, in [[:file:H4CH12FG1.JPG|Figure 1]]. If a model predicts a charge volume greater than the minimum, then it might be said that little or no charge risk exists. Similarly, if a model predicts a charge volume less than the minimum, then we might say that a significant charge risk exists. 
These cases are illustrated in Figure 1 and are labeled “low risk” and “high risk,” respectively. However, the perception of what is low risk and what is high risk can change greatly when the probability of an outcome is considered. In this example, the difference between low risk and high risk becomes less definitive, as indicated in [[:file:H4CH12FG1.JPG|Figure 1]]. Although this is a simplistic illustration, all of the key input parameters in a basin model have the potential to cause this degree of ambiguity in the final results. For that reason, estimates of the range of possible outcomes are as important to the final analysis as estimates of the most likely outcome. |

| + | |||

| + | ==Handling uncertainty== | ||

| + | The degree of effort required to adequately handle uncertainty will vary for different parameters and model objectives. In thinking about how to quantify uncertainty, it is commonly useful to divide input parameters into two groups: (1) scalars and (2) maps and volumes. Assigning and propagating uncertainties in scalar values is rather straightforward; assigning and propagating uncertainties in maps and volumes is far less straightforward. | ||

| + | |||

| + | Examples of scalars in a model are the age of a surface or an event or the coefficient in a rock property equation (e.g., compaction, thermal conductivity, or permeability). Probability distribution functions can be used to describe most likely values and the estimated range of scalar values. These distribution functions are used to describe the range and likelihood of values and then to propagate the described uncertainty through a basin model. Simple distributions such as uniform (rectangular), triangular, normal, and log normal are commonly used, but any distribution can be used as long as it honors the observed data while properly accounting for any dependencies. | ||

| + | |||

| + | Examples of maps and volumes include depth surfaces (including paleo–water depths), facies distributions, and basal heat-flow maps. In general, quantifying uncertainties in maps and volumes is much more difficult than quantifying uncertainty in scalars because they consist of multiple values and the spatial dependencies of these values are commonly not easily characterized. Varying each location in a map or volume independently of others is not an ideal solution as this does not consider spatial dependencies. Several approaches have been used in the industry to address the issues associated with maps and volumes. These approaches include simple additive and multiplicative factors, geostatistical approaches, forward modeling, and weighting of multiple scenarios. | ||

| + | |||

| + | Consider two common cases: (1) uncertainty in the depth to a surface generated during a two-dimensional seismic interpretation and (2) uncertainty in the distribution of lithologies within an isopach. Various approaches are available to address each case. In the first case, three primary sources of uncertainty are present: (1) picking the correct surface from the seismic and carrying it throughout the data, (2) interpolation of the surface between the data constraints, and (3) the time-to-depth conversion. In the second case, a different set of uncertainty issues present themselves. Uncertainty in the values of the facies properties and uncertainty in the spatial distribution of those values exist. | ||

| + | |||

| + | Options for handling uncertainty in the depth to a surface might include using a stochastic additive or multiplicative factor to handle uncertainties in interval velocities and interpolation uncertainties using a conditional geostatistical technique (such as Gaussian simulation) to handle estimation away from well control and/or generating multiple scenarios to handle uncertainty in interpretation. Options for handling uncertainty in the distribution of lithologies might include using a conditional geostatistical technique to handle estimation away from data control, forward modeling to handle extrapolation to areas with no data control, and generation of multiple scenarios to handle uncertainty in interpretation and understanding. The best approach in any given situation depends on the type of input parameter under consideration and the available data. | ||

| + | |||

| + | ==Approach== | ||

| + | The workflow presented involves the following steps: | ||

| + | |||

| + | Step 1: Identify the purpose(s) of the model. | ||

| + | |||

| + | Step 2: Develop a base-case scenario. | ||

| + | * The objective of this step is to build a base-case model to be used in subsequent sensitivity analyses. | ||

| + | |||

| + | Step 3: Identify input parameters whose uncertainty might affect the output property of interest and estimate the preliminary ranges of uncertainty for these input parameters. | ||

| + | |||

| + | Step 4: Perform screening simulations to identify key input parameters. | ||

| + | * In the screening step, the parameters whose uncertainty has a significant effect on the output property of interest are identified. The output property of interest should be selected with care and in light of the overall objective of the modeling exercise. For example, given the same model, the key uncertainties are likely to be different depending on whether prediction of overpressure, hydrocarbon charge, or oil quality motivated the construction of the model. The method involves conducting a set of deterministic simulations where each parameter is independently varied from the minimum to the maximum value. The results are plotted using a tornado or similar plot to aid in identification of parameters whose uncertainty has the greatest effect on the desired result. In this evaluation, it is important to consider potential nonlinearities in parameter behavior and to reevaluate the notional base-case model created in step 2. | ||

| + | |||

| + | Step 5: Evaluate the range of uncertainty in key input parameters. | ||

| + | * Once the key parameters have been identified, uncertainties in these parameters are quantified and assigned. The quantification in this step is generally more rigorous than in step 3 (preliminary screening). Quantification typically involves selecting and populating a probability distribution (e.g., uniform, triangular, normal) for each of the key input parameters identified. | ||

| + | |||

| + | Step 6: Propagate the uncertainty in key input parameters through to the output properties of interest via Monte Carlo or similar analysis. | ||

| + | * The final step is a Monte Carlo simulation where values for the input parameters identified in step 4 are randomly selected from the distributions assigned in step 5. The key results from each realization are saved for subsequent evaluation. | ||

| + | |||

| + | Step 7: Iterate as needed to fine tune the input parameters and dependencies between input parameters, to fine tune error bars and weights for calibration data, and to improve the base-case scenario. | ||

| + | * Although several approaches could be used to quantify uncertainty in models, the approach presented uses Monte Carlo simulation. Monte Carlo simulation has the advantage of being (1) able to handle any probability distribution function, (2) able to account for dependencies between variables, and (3) straightforward to implement. It is also typically straightforward to analyze the results of the Monte Carlo simulation. The disadvantages include that it may require a large number of realizations to adequately sample the possible solution space, and it may be difficult to adequately develop probability distribution functions or the required realizations, particularly for maps and volumes. | ||

| + | |||

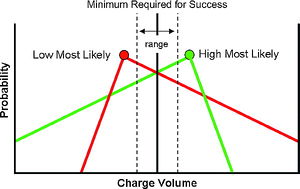

| + | [[File:H4CH12FG2.JPG|thumb|300px|{{figure number|2}}Present-day depth structure map (meters) of the key migration surface. The outlines of the drainage polygons are shown in black, the closures are outlined in red, and the escape paths are shown in green. The scale bar in the legend represents 12,500 m (41,010 ft).]] | ||

| + | |||

| + | ==Hypothetical example== | ||

| + | A hypothetical example is presented to illustrate the approach described. Although the geology is synthetic, it was constructed with realistic basin modeling issues in mind. In this example, the traps of interest formed about 15 Ma. The primary question addressed by the model is, “What is the volume of oil charge to each of the traps during the last 15 m.y.?” | ||

| + | |||

| + | [[file:H4CH12FG3.JPG|thumb|300px|{{figure number|3}}Cross section through the model along the line AA' shown in [[:file:H4CH12FG2.JPG|Figure 2]].]] | ||

| + | |||

| + | For the purposes of this illustration, the migration analysis has been simplified, and it has been assumed that a present-day map-based drainage analysis is sufficient. A map view of the key surface for the map-based drainage analysis is shown in [[:file:H4CH12FG2.JPG|Figure 2]], and a cross section through the model is shown in [[:file:H4CH12FG3.JPG|Figure 3]]. A burial history curve at location X in [[:file:H4CH12FG2.JPG|Figure 2]] is shown in [[:file:H4CH12FG4.JPG|Figure 4]]. Also shown in [[:file:H4CH12FG4.JPG|Figure 4]] are three potential hydrocarbon source rocks, Upper [[Jurassic]], Lower [[Cretaceous]], and lower [[Miocene]]. The sources are modeled as uniformly distributed [[marine]] [[source rock]]s with some terrigenous input. | ||

| + | |||

| + | [[File:H4CH12FG4.JPG|thumb|300px|{{figure number|4}}Burial history curve for location X represented by the dot in [[:file:H4CH12FG2.JPG|Figure 2]]. [[Source rock]]s are in the middle of each indicated isopachs.]] | ||

| + | |||

| + | ===Step 1: identify the purpose of the model=== | ||

| + | As previously stated, the purpose of this model is to estimate the volume of oil charge to individual traps during the last 15 m.y. | ||

| + | |||

| + | ===Step 2: develop a base-case scenario=== | ||

| + | The next step is to develop and calibrate a base-case scenario. Values for the selected parameters used in this example are listed in the "Most Likely" column of Table 2. In this hypothetical model, only one calibration point is present, so a match to the data is relatively straightforward, but is also nonunique (Figure 5). The uncertainty around the single temperature measurement (±15°C) is indicated by the error bars. | ||

==References== | ==References== | ||

Revision as of 20:24, 9 July 2015

This article is under construction

| Basin Modeling: New Horizons in Research and Applications | |
|---|---|
| Series | Hedberg |
| Chapter | Identifying and Quantifying Significant Uncertainties in Basin Modeling |
| Author | P. J. Hicks Jr., C. M. Fraticelli, J. D. Shosa, M. J. Hardy, and M. B. Townsley |
| Link | Web page |
| Store | AAPG Store |

Identifying and quantifying significant uncertainties in basin modeling, 2012, Hicks, P. J. Jr., C. M. Fraticelli, J. D. Shosa, M. J. Hardy, and M. B. Townsley, in Peters, Kenneth E., David J. Curry, and Marek Kacewicz, eds., Basin modeling: New horizons in research and applications: AAPG Hedberg Series no. 4, p. 207-219.

Basin modeling is an increasingly important element of exploration, development, and production workflows. Problems addressed with basin models typically include questions regarding burial history, source maturation, hydrocarbon yields (timing and volume), hydrocarbon migration, hydrocarbon type and quality, reservoir quality, and reservoir pressure and temperature prediction for pre–drill analysis. As computing power and software capabilities increase, the size and complexity of basin models also increase. These larger, more complex models address multiple scales (well to basin) and problems of variable intricacy, making it more important than ever to understand how the uncertainties in input parameters affect model results.

Increasingly complex basin models require an ever-increasing number of input parameters with values that are likely to vary both spatially and temporally. Some of the input parameters that are commonly used in basin models and their potential effect on model results are listed in Table 1. For a basin model to be successful, the modeler must not only determine the most appropriate estimate for the value for each input parameter, but must also understand the range of uncertainty associated with these estimates and the uncertainties related to the assumptions, approximations, and mathematical limitations of the software. This second type of uncertainty may involve fundamental physics that are not adequately modeled by the software and/or the numerical schemes used to solve the underlying partial differential equations. Although these issues are not addressed in this article or by the proposed workflow, basin modelers should be aware of these issues and consider them in any final recommendations or conclusions.

| Property | Effect |
|---|---|
| Depths (or isopachs) | Thickness of each unit controls the relative amount of each rock type (see bulk rock properties) and controls the depth and, therefore, temperature and maturity of each stratigraphic unit. |
| Ages | Ages of each surface and event control timing and thereby control the transient behavior in the model. |
| Bulk rock properties | The bulk rock properties control the thermal and fluid transport properties (thermal conductivity, density, heat capacity, radiogenic heat, and permeability) that control thermal and pressure evolution in the model. |
| Missing section (erosion) | The amount of missing section controls the burial history of sediments below the associated unconformity. The burial history controls bulk rock properties (primarily through the compaction history) and the temperature and pressure through time. |
| Fluid properties | Fluid properties control bulk rock properties in the porous rocks and migration rates, and affect seal capacity. |
| Source properties | Source properties control the timing, rate, and fluid type for hydrocarbon generation and expulsion from the source rocks. |
| Bottom boundary condition | The bottom boundary condition directly affects the temperatures throughout the model. The effects are roughly proportional to depth. |
| Top boundary | Changes in the top temperature boundary condition affect the steady-state temperature through the model ~1:1. Changes in water depth can affect the top temperature boundary condition and the pressure at the mudline. |
| Calibration data | The quantity of good calibration data has a direct impact on the quality of the model and the reliability of predictions based on model outputs. |
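The two boundary-condition entries in the table can be made concrete with a simplified relation. As an assumption for illustration only (one-dimensional, steady-state conduction through a column of uniform thermal conductivity $k$, with no radiogenic heat production), the temperature at depth $z$ follows from the surface temperature $T_s$ and the basal heat flow $q$. These symbols are introduced here and do not appear in the original table.

```latex
% Assumed: 1D steady-state conduction, uniform conductivity k, no radiogenic heat
T(z) = T_s + \frac{q\,z}{k}

% Sensitivity to the top boundary condition: a 1:1 shift at every depth
\frac{\partial T}{\partial T_s} = 1

% Sensitivity to the bottom boundary condition: grows linearly with depth
\frac{\partial T}{\partial q} = \frac{z}{k}
```

Under these assumptions, a change in surface temperature shifts the whole profile by the same amount, whereas a change in basal heat flow produces a temperature change that increases in proportion to depth. Real models with layered conductivity, radiogenic heat, and transient effects will only approximate this behavior.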

Considering uncertainty

Uncertainty is present in most, if not all, model inputs and calibration data. These uncertainties generate uncertainties in the model outputs. Sometimes, the resultant uncertainties are not significant enough to impact decisions based on the model results. Other times, these uncertainties can make the model results virtually useless in the decision-making process. Of course, a wide range of cases exist between these extremes, and this is where basin modelers commonly work. In these cases, the model results can be useful, but the uncertainties surrounding the model predictions can be difficult to fully grasp and communicate. Successful decisions based on models in which significant uncertainties exist require that the modeler (1) identify and quantify uncertainties in key input parameters, (2) adequately propagate these uncertainties from input through to output, particularly for three-dimensional models, and (3) clearly communicate this information to decision makers.

Several potential pitfalls can be avoided. Some of the most common are:

- not keeping the goal of the model in mind as it is built to direct and focus the appropriate levels of effort during the model construction

- not clearly identifying which uncertainties in input parameters will have significant impacts on the results and subsequent business decisions

- ignoring the uncertainties because of insufficient time or a lack of knowledge of how to handle them adequately

- working hard on issues that we are comfortable working on, instead of on those that are truly important

- not recognizing feasible alternative scenarios

Why consider uncertainty? Is not a single deterministic case sufficient for analysis? Consider the simple case of estimating charge volume to a trap. The necessary minimum charge volume required for success (i.e., low charge risk) and a range associated with this minimum charge have been defined and are illustrated by the vertical black solid and dashed lines, respectively, in Figure 1. If a model predicts a charge volume greater than the minimum, then it might be said that little or no charge risk exists. Similarly, if a model predicts a charge volume less than the minimum, then we might say that a significant charge risk exists. These cases are illustrated in Figure 1 and are labeled “low risk” and “high risk,” respectively. However, the perception of what is low risk and what is high risk can change greatly when the probability of an outcome is considered. In this example, the difference between low risk and high risk becomes less definitive, as indicated in Figure 1. Although this is a simplistic illustration, all of the key input parameters in a basin model have the potential to cause this degree of ambiguity in the final results. For that reason, estimates of the range of possible outcomes are as important to the final analysis as estimates of the most likely outcome.
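The effect described in this paragraph can be checked numerically. The following is a minimal sketch that assumes two triangular distributions with hypothetical parameters (they are not read from Figure 1) and computes, for each, the probability that the charge volume meets a hypothetical minimum. The function names, the units, and all numbers are illustrative assumptions.

```python
def triangular_cdf(x, low, mode, high):
    """P(X <= x) for a triangular distribution on [low, high] peaking at mode."""
    if x <= low:
        return 0.0
    if x >= high:
        return 1.0
    if x <= mode:
        return (x - low) ** 2 / ((high - low) * (mode - low))
    return 1.0 - (high - x) ** 2 / ((high - low) * (high - mode))


def prob_meets_minimum(minimum_charge, low, mode, high):
    """Probability that the charge volume is at least the required minimum."""
    return 1.0 - triangular_cdf(minimum_charge, low, mode, high)


# Hypothetical values in arbitrary volume units (assumptions, not from Figure 1).
MINIMUM_CHARGE = 100.0
high_most_likely = (20.0, 120.0, 140.0)  # (low, mode, high): mode above minimum
low_most_likely = (60.0, 90.0, 300.0)    # (low, mode, high): mode below minimum

p_high = prob_meets_minimum(MINIMUM_CHARGE, *high_most_likely)
p_low = prob_meets_minimum(MINIMUM_CHARGE, *low_most_likely)

print(f"High Most Likely case: P(charge >= minimum) = {p_high:.2f}")
print(f"Low Most Likely case:  P(charge >= minimum) = {p_low:.2f}")
```

With these assumed numbers, the case whose most likely value lies below the minimum has the higher probability of meeting it, because of its long high-side tail. A single deterministic run at the most likely value would rank the two cases the other way.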

Handling uncertainty

The degree of effort required to adequately handle uncertainty will vary for different parameters and model objectives. In thinking about how to quantify uncertainty, it is commonly useful to divide input parameters into two groups: (1) scalars and (2) maps and volumes. Assigning and propagating uncertainties in scalar values is rather straightforward; assigning and propagating uncertainties in maps and volumes is far less straightforward.

Examples of scalars in a model are the age of a surface or an event or the coefficient in a rock property equation (e.g., compaction, thermal conductivity, or permeability). Probability distribution functions can be used to describe most likely values and the estimated range of scalar values. These distribution functions are used to describe the range and likelihood of values and then to propagate the described uncertainty through a basin model. Simple distributions such as uniform (rectangular), triangular, normal, and log normal are commonly used, but any distribution can be used as long as it honors the observed data while properly accounting for any dependencies.
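As a sketch of how scalar uncertainties of this kind might be described and sampled, the example below uses only the Python standard library. The parameter names, distribution choices, and numerical values are hypothetical and are not taken from the article or its Table 2.

```python
import random


def sample_scalar(spec, rng):
    """Draw one value from a simple distribution described by a dict."""
    kind = spec["dist"]
    if kind == "uniform":
        return rng.uniform(spec["min"], spec["max"])
    if kind == "triangular":
        # random.triangular takes (low, high, mode) in that order
        return rng.triangular(spec["min"], spec["max"], spec["mode"])
    if kind == "normal":
        return rng.gauss(spec["mean"], spec["sd"])
    if kind == "lognormal":
        # mu and sigma describe the underlying normal distribution
        return rng.lognormvariate(spec["mu"], spec["sigma"])
    raise ValueError(f"unknown distribution: {kind}")


# Hypothetical scalar inputs (names and values are assumptions).
SCALARS = {
    "surface_age_ma": {"dist": "triangular", "min": 14.0, "mode": 15.0, "max": 16.0},
    "compaction_coefficient": {"dist": "uniform", "min": 0.3, "max": 0.6},
    "conductivity_w_mk": {"dist": "normal", "mean": 2.0, "sd": 0.2},
    "permeability_multiplier": {"dist": "lognormal", "mu": 0.0, "sigma": 0.5},
}

rng = random.Random(42)  # fixed seed so the realization is repeatable
realization = {name: sample_scalar(spec, rng) for name, spec in SCALARS.items()}

for name, value in realization.items():
    print(f"{name}: {value:.3f}")
```

This sketch samples each scalar independently. Where inputs depend on one another, the dependency has to be built into the sampling, as the text notes.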

Examples of maps and volumes include depth surfaces (including paleo–water depths), facies distributions, and basal heat-flow maps. In general, quantifying uncertainties in maps and volumes is much more difficult than quantifying uncertainty in scalars because they consist of multiple values and the spatial dependencies of these values are commonly not easily characterized. Varying each location in a map or volume independently of others is not an ideal solution as this does not consider spatial dependencies. Several approaches have been used in the industry to address the issues associated with maps and volumes. These approaches include simple additive and multiplicative factors, geostatistical approaches, forward modeling, and weighting of multiple scenarios.

Consider two common cases: (1) uncertainty in the depth to a surface generated during a two-dimensional seismic interpretation and (2) uncertainty in the distribution of lithologies within an isopach. Various approaches are available to address each case. In the first case, three primary sources of uncertainty are present: (1) picking the correct surface from the seismic and carrying it throughout the data, (2) interpolation of the surface between the data constraints, and (3) the time-to-depth conversion. In the second case, a different set of uncertainty issues arises: uncertainty exists both in the values of the facies properties and in the spatial distribution of those values.

Options for handling uncertainty in the depth to a surface might include using a stochastic additive or multiplicative factor to handle uncertainties in interval velocities, using a conditional geostatistical technique (such as Gaussian simulation) to handle interpolation uncertainties away from well control, and/or generating multiple scenarios to handle uncertainty in interpretation. Options for handling uncertainty in the distribution of lithologies might include using a conditional geostatistical technique to handle estimation away from data control, forward modeling to handle extrapolation to areas with no data control, and generation of multiple scenarios to handle uncertainty in interpretation and understanding. The best approach in any given situation depends on the type of input parameter under consideration and the available data.
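The simplest of these options, a stochastic multiplicative factor applied to a whole depth map, can be sketched as follows. This is an illustration under stated assumptions, not a geostatistical simulation: one factor is drawn per realization and applied to every cell, so the shape of the surface is preserved. The grid values, the factor range, and the function name are hypothetical.

```python
import random


def perturb_depth_map(depth_map, rng, factor_min=0.9, factor_mode=1.0, factor_max=1.1):
    """Scale an entire depth map by one randomly drawn multiplicative factor.

    Drawing a single factor per realization keeps all cells perfectly
    correlated, unlike perturbing each cell independently.
    """
    factor = rng.triangular(factor_min, factor_max, factor_mode)
    scaled = [[factor * depth for depth in row] for row in depth_map]
    return scaled, factor


# Hypothetical 3 x 3 depth grid in meters (values are assumptions).
base_map = [
    [2500.0, 2400.0, 2550.0],
    [2450.0, 2300.0, 2500.0],
    [2600.0, 2450.0, 2650.0],
]

rng = random.Random(7)
realizations = [perturb_depth_map(base_map, rng) for _ in range(5)]

for scaled_map, factor in realizations:
    crest = min(min(row) for row in scaled_map)
    print(f"factor = {factor:.3f}, crest depth = {crest:.1f} m")
```

Because every cell is scaled together, the crest stays at the same grid location in each realization. Representing interpolation uncertainty that changes the shape of the surface would require a spatially correlated method such as the conditional geostatistical techniques mentioned in the text.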

Approach

The workflow presented involves the following steps:

Step 1: Identify the purpose(s) of the model.

Step 2: Develop a base-case scenario.

- The objective of this step is to build a base-case model to be used in subsequent sensitivity analyses.

Step 3: Identify input parameters whose uncertainty might affect the output property of interest and estimate the preliminary ranges of uncertainty for these input parameters.

Step 4: Perform screening simulations to identify key input parameters.

- In the screening step, the parameters whose uncertainty has a significant effect on the output property of interest are identified. The output property of interest should be selected with care and in light of the overall objective of the modeling exercise. For example, given the same model, the key uncertainties are likely to be different depending on whether prediction of overpressure, hydrocarbon charge, or oil quality motivated the construction of the model. The method involves conducting a set of deterministic simulations where each parameter is independently varied from the minimum to the maximum value. The results are plotted using a tornado or similar plot to aid in identification of parameters whose uncertainty has the greatest effect on the desired result. In this evaluation, it is important to consider potential nonlinearities in parameter behavior and to reevaluate the notional base-case model created in step 2.
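The screening step can be sketched as a one-at-a-time sensitivity loop whose results are ranked for a tornado plot. In this minimal example, `toy_model` is a hypothetical stand-in for a full basin simulation, and the parameter names, base-case values, and ranges are assumptions chosen only to make the sketch runnable.

```python
def toy_model(params):
    """Hypothetical stand-in for one deterministic basin model run."""
    return (
        params["source_thickness_m"]
        * params["toc_fraction"]
        * params["expulsion_efficiency"]
    )


def one_at_a_time(model, base_case, ranges):
    """Vary each parameter alone from its minimum to its maximum value.

    Returns rows of (name, output_at_min, output_at_max, swing), sorted so
    the parameter with the largest swing comes first, as in a tornado plot.
    """
    rows = []
    for name, (low, high) in ranges.items():
        out_low = model({**base_case, name: low})
        out_high = model({**base_case, name: high})
        rows.append((name, out_low, out_high, abs(out_high - out_low)))
    rows.sort(key=lambda row: row[3], reverse=True)
    return rows


# Hypothetical base case and preliminary ranges (assumptions).
BASE_CASE = {
    "source_thickness_m": 100.0,
    "toc_fraction": 0.04,
    "expulsion_efficiency": 0.5,
}
RANGES = {
    "source_thickness_m": (80.0, 120.0),
    "toc_fraction": (0.02, 0.06),
    "expulsion_efficiency": (0.45, 0.55),
}

tornado_rows = one_at_a_time(toy_model, BASE_CASE, RANGES)
for name, out_low, out_high, swing in tornado_rows:
    print(f"{name:24s} min -> {out_low:.2f}  max -> {out_high:.2f}  swing = {swing:.2f}")
```

Evaluating only the minimum and maximum can miss nonlinear behavior between the end points, which is one reason the text recommends considering nonlinearities; adding intermediate values per parameter is a simple extension.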

Step 5: Evaluate the range of uncertainty in key input parameters.

- Once the key parameters have been identified, uncertainties in these parameters are quantified and assigned. The quantification in this step is generally more rigorous than in step 3 (preliminary screening). Quantification typically involves selecting and populating a probability distribution (e.g., uniform, triangular, normal) for each of the key input parameters identified.

Step 6: Propagate the uncertainty in key input parameters through to the output properties of interest via Monte Carlo or similar analysis.

- The final step is a Monte Carlo simulation where values for the input parameters identified in step 4 are randomly selected from the distributions assigned in step 5. The key results from each realization are saved for subsequent evaluation.

Step 7: Iterate as needed to fine tune the input parameters and dependencies between input parameters, to fine tune error bars and weights for calibration data, and to improve the base-case scenario.

- Although several approaches could be used to quantify uncertainty in models, the approach presented uses Monte Carlo simulation. Monte Carlo simulation has the advantage of being (1) able to handle any probability distribution function, (2) able to account for dependencies between variables, and (3) straightforward to implement. It is also typically straightforward to analyze the results of the Monte Carlo simulation. The disadvantages include that it may require a large number of realizations to adequately sample the possible solution space, and it may be difficult to adequately develop probability distribution functions or the required realizations, particularly for maps and volumes.
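The propagation step, including a dependency between two inputs, can be sketched with the standard library alone. Here `toy_model` is again a hypothetical stand-in for a basin simulation, the two inputs and their distributions are assumptions, and the dependency is imposed by building correlated standard normal deviates from two independent ones.

```python
import math
import random
import statistics


def toy_model(thickness_m, toc_fraction):
    """Hypothetical stand-in for one basin model realization."""
    return thickness_m * toc_fraction


def correlated_standard_normals(rng, rho):
    """Return two standard normal deviates with correlation coefficient rho."""
    z1 = rng.gauss(0.0, 1.0)
    z2 = rng.gauss(0.0, 1.0)
    return z1, rho * z1 + math.sqrt(1.0 - rho ** 2) * z2


def run_monte_carlo(n_realizations, rho, seed=0):
    """Sample the inputs, run the model, and save the result of each realization."""
    rng = random.Random(seed)
    results = []
    for _ in range(n_realizations):
        za, zb = correlated_standard_normals(rng, rho)
        thickness = 100.0 + 10.0 * za        # normal: mean 100 m, sd 10 m (assumed)
        toc = 0.04 * math.exp(0.25 * zb)     # log-normal about 0.04 (assumed)
        results.append(toy_model(thickness, toc))
    return results


def percentile(sorted_values, fraction):
    """Value below which roughly the given fraction of sorted results fall."""
    index = min(len(sorted_values) - 1, int(fraction * len(sorted_values)))
    return sorted_values[index]


independent = sorted(run_monte_carlo(5000, rho=0.0))
dependent = sorted(run_monte_carlo(5000, rho=0.8))

for label, results in (("rho = 0.0", independent), ("rho = 0.8", dependent)):
    summary = [percentile(results, f) for f in (0.10, 0.50, 0.90)]
    print(label, "10th/50th/90th percentiles:", [round(v, 2) for v in summary])
```

In this sketch, a positive dependency between the two inputs widens the spread of the output relative to the independent case, which illustrates why ignoring dependencies can misstate the range of outcomes. The number of realizations needed for stable percentiles is problem dependent.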

Hypothetical example

A hypothetical example is presented to illustrate the approach described. Although the geology is synthetic, it was constructed with realistic basin modeling issues in mind. In this example, the traps of interest formed about 15 Ma. The primary question addressed by the model is, “What is the volume of oil charge to each of the traps during the last 15 m.y.?”

For the purposes of this illustration, the migration analysis has been simplified, and it has been assumed that a present-day map-based drainage analysis is sufficient. A map view of the key surface for the map-based drainage analysis is shown in Figure 2, and a cross section through the model is shown in Figure 3. A burial history curve at location X in Figure 2 is shown in Figure 4. Also shown in Figure 4 are three potential hydrocarbon source rocks, Upper Jurassic, Lower Cretaceous, and lower Miocene. The sources are modeled as uniformly distributed marine source rocks with some terrigenous input.

Step 1: identify the purpose of the model

As previously stated, the purpose of this model is to estimate the volume of oil charge to individual traps during the last 15 m.y.

Step 2: develop a base-case scenario

The next step is to develop and calibrate a base-case scenario. Values for the selected parameters used in this example are listed in the "Most Likely" column of Table 2. In this hypothetical model, only one calibration point is present, so a match to the data is relatively straightforward, but is also nonunique (Figure 5). The uncertainty around the single temperature measurement (±15°C) is indicated by the error bars.
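The nonuniqueness of a match to a single calibration point can be illustrated with a deliberately simplified thermal model. The sketch below assumes one-dimensional, steady-state conduction with uniform thermal conductivity and no radiogenic heat; only the ±15°C error bar comes from the text, and the measured temperature, depth, surface temperature, and candidate parameter values are hypothetical.

```python
SURFACE_TEMP_C = 10.0     # assumed
DEPTH_M = 3000.0          # assumed depth of the temperature measurement
MEASURED_TEMP_C = 100.0   # assumed measured temperature
ERROR_BAR_C = 15.0        # uncertainty quoted in the text


def steady_state_temperature(heat_flow_mw_m2, conductivity_w_mk):
    """Temperature at DEPTH_M for 1D steady-state conduction (assumed model)."""
    heat_flow_w_m2 = heat_flow_mw_m2 / 1000.0
    return SURFACE_TEMP_C + heat_flow_w_m2 * DEPTH_M / conductivity_w_mk


# Hypothetical candidate combinations of basal heat flow and conductivity.
candidates = [
    (heat_flow, conductivity)
    for heat_flow in (40.0, 50.0, 60.0, 70.0, 80.0)
    for conductivity in (1.5, 2.0, 2.5)
]

# Keep every combination that honors the measurement within its error bar.
acceptable = [
    (heat_flow, conductivity)
    for heat_flow, conductivity in candidates
    if abs(steady_state_temperature(heat_flow, conductivity) - MEASURED_TEMP_C) <= ERROR_BAR_C
]

for heat_flow, conductivity in acceptable:
    temp = steady_state_temperature(heat_flow, conductivity)
    print(f"q = {heat_flow:.0f} mW/m2, k = {conductivity:.1f} W/m/K -> {temp:.1f} C")
```

Several quite different combinations fit the single measurement, which is the sense in which the base-case match is nonunique. Additional calibration data, or tighter error bars, would narrow the set of acceptable combinations.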